Mailing Address

Lab: CASE 261 MMC Campus FIU

11200 SW 8th St,

Miami, FL 33199, USA

You can also find us on the map below.

Phone

Email (best way to reach us)

Laboratory, Department, and University

Located in Miami, FIU is Florida’s fastest growing public research university R1 - Very High Research Activity - University, the fourth largest university in the nation, and one of the nation’s largest Hispanic-Serving Institutions.

Our laboratory is part of the Knight Foundation School of Computing and Information Sciences (KFSCIS) at FIU. FIU is ranked as #46 in public universities (#98 overall) in the US. NSF ranks FIU 39th nationwide in externally-funded research expenditures and KFSCIS FIU R&D expenditures is ranked at 54 in the US and is climbing. More information about FIU program ranking can be found at this link.

The KFSCIS School has 35 tenure-track faculty members and over 2,000 students, including over 90 Ph.D. students. The School is engaged in on-going and exciting new and expanding programs for research, education and outreach. The School offers B.S., M.S., and Ph.D. degrees in Computer Science, and M.S. degrees in Telecommunications and Networking, Cyber-security, and Information Technology as well as B.S./B.A. degrees in Information Technology. For more information, visit https://www.cis.fiu.edu/

Laboratory Space

Saeed Lab is located on the 2nd floor of FIU’s CASE building – CASE 261 which can accommodate 12 personals and has conference space and facilities.

Dr. Saeed has an independent dedicated lab space (approx. 1000 sq. ft) that is used by the students for research purposes. The lab carrels are equipped with desktop systems, machine with multicore processors and HPC servers with GPU’s whenever necessary. Further, the students have access to printers, storage devices, computer networking infrastructure, multiple conference spaces for collaborations, and essential research access (such as research paper and books). The students have access to multiple compute infrastructure needed to complete their projects.

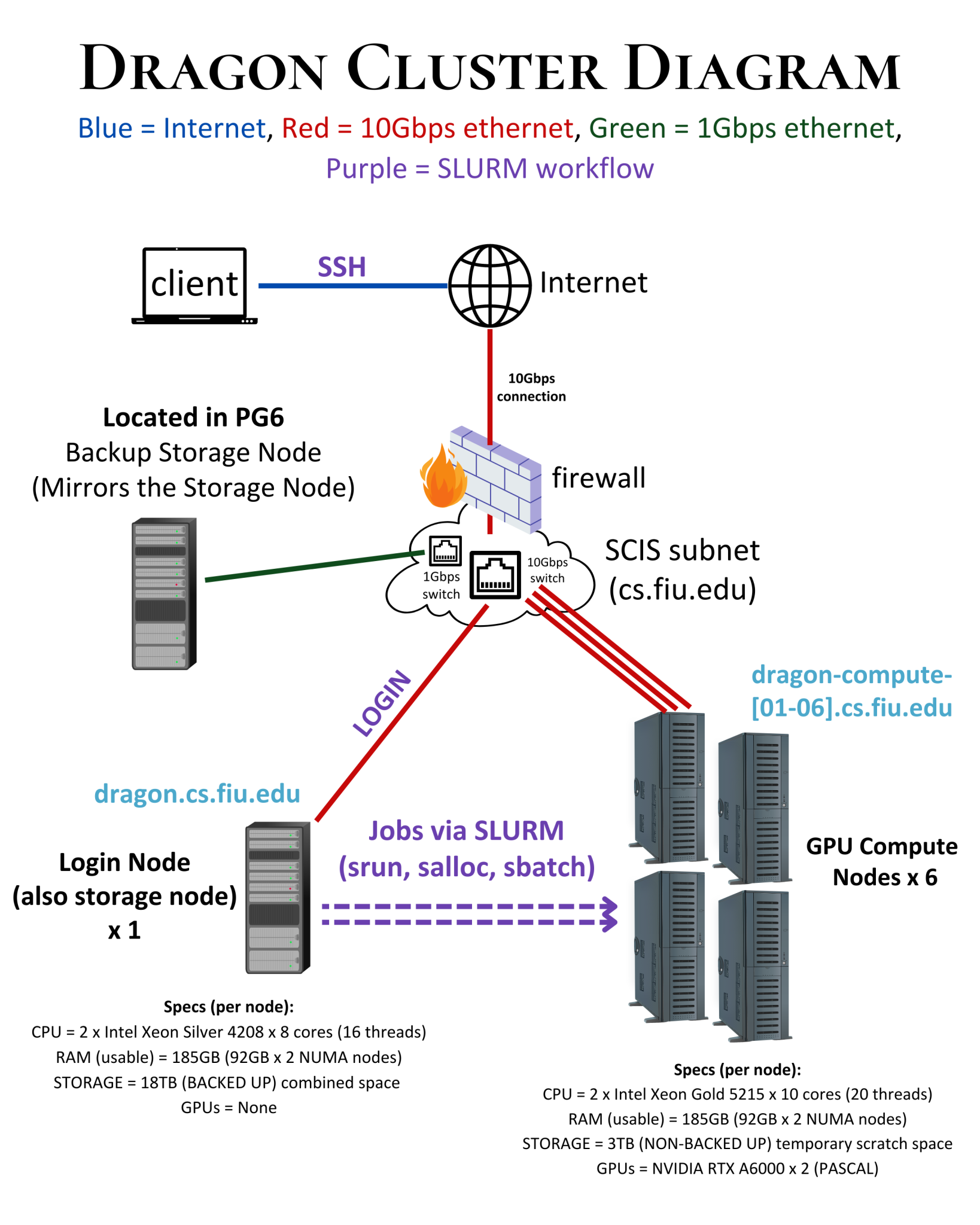

The School of Computing and Information Sciences (SCIS) maintains a data center, research and instructional labs, and computer classroom facilities. These facilities are housed in the Engineering and Computer Science (ECS) and PG6 Tech Station (PG6) buildings on the Modesto A. Maidique Campus located in Miami, Fl. The facilities are maintained by a dedicated professional IT support staff as noted in the staffing section below. The School provides computing services such as file, compute, web, email, messaging, backup, print, and other computing services. Our networking services include a 10 Gigabit Ethernet core network that interconnects rack mounted switches and servers. All school desktop systems are connected by 1 Gigabit switched ports. Our network is highly redundant with multiple fiber and copper paths and is designed with routing fail-over capacity. We provide automated monitoring of our network and servers 24×7. The building subscribes to the university 802.11 WiFi network and SCIS maintains some research WiFi networks. Our network interconnects at 10GBs to the campus backbone, which provides a 40GBs connection to the NAP of the Americas to provide for connections to Internet, Internet2, Florida and National Lambda Rail, and CLARA (South American Research) networks. Our systems feature a variety of open source, commercial development and scientific software products from numerous vendors including IBM, Microsoft, ESRI, MathWorks, etc. We provide middleware technologies to support web services.

Local Lab HPC workstation

This workstation is a local workstation that is available to us. This workstation is primarily used for MPI/OPENMP/CUDA development and consists of 2 Intel® Xeon® Gold 6338 Processor (48M Cache, 2.00 GHz) with 32 cores for each processor. Further, 256GB of RAM, and 10TB of hard drive is available to this system. The workstation is also equipped with NVIDIA TITAN Xp GPU.

Local FPGA workstations

These servers are equipped with Intel Xeon processors and Intel DE-5 FPGA’s (Intel grant). Our servers are equipped with Intel(R) Xeon(R) Gold 6152 CPU @ 2.10GHz with 22 cores each, and there are 2 processor on each server. with Intel Xeon processors with TITAN Xp Nvidia GPU’s (NVIDIA grant) and Intel DE-5 FPGA’s (Intel grant). Our servers are equipped with Intel(R) Xeon(R) Gold 6152 CPU @ 2.10GHz with 22 cores each, and there are 2 processor on each server. Also the server is equipped with 128GB of RAM, and 10TB or hard-drive. The Terasic DE5-Net Stratix V GX FPGA is an ideal hardware solution for designs that demand high capacity and bandwidth memory interfacing, ultra-low latency communication, and power efficiency. The Stratix® V GX FPGA features integrated transceivers that transfer at a maximum of 12.5 Gbps, allowing the DE5-Net to be fully compliant with version 3.0 of the PCI Express standard, as well as allowing an ultra-low-latency, straight connections to four external 10G SFP+ modules. For designs that demand high capacity and high speed for memory and storage, the DE5-Net delivers with two independent banks of DDR3 SO-DIMM RAM, four independent banks of QDRII+ SRAM, high-speed parallel flash memory, and four SATA ports. The feature-set of the DE5-Net fully supports all high-intensity applications such as low-latency trading, cloud computing, high-performance computing, data acquisition, network processing, and signal processing. Our basic code design, development, and testing will be done on these machines. This machine will allow us to do our preliminary parallel algorithms with single machine for scalability analysis. Also the server is equipped with 128GB of RAM, and 10TB or hard-drive. Our basic code design, development, and testing will be done on these machines. This machine will allow us to do our preliminary parallel algorithms with single machine for scalability analysis. For multiple nodes with FPGA’s, we will acquire access to different national infrastructures

Local HPC (GPU equipped) Cluster Resources – (Dragon Cluster)

Dedicated HPC cluster is available to Saeed’s lab – consisting of memory distributed architecture equipped with multicore and GPU nodes. This cluster consists of 12 nodes where each node is equipped with 2 Intel Xeon Scalable Gold 6248, 2.5GHz (20-Core each), 12 x 32GB PC4-25600 3200MHz DDR4, 2 x Intel 1.6TB SSD. Further each node is equipped with 2 NVIDIA Quadro RTX 6000 GPU’s. All of these can be used for running our preliminary scalability experiments and results. We maintain an internal document on how to use Dragon Cluster which can be accessed here: https://saeedwiki.cis.fiu.edu/2022/06/03/how-to-use-slurm-on-dragon-cluster/

NSF XSEDE (GPU Equipped) supercomputers (Current PI Saeed award # TG-CCR150017) such as XSEDE Comet, Blue Waters (Cray XE/XK), FRONTERA (Intel “Sky Lake” Xeon processor/DDR-4/SSD HD) which can be accessed by research groups through call for allocations for compute-time and storage. Systems such as this will be ideal for scalability studies of the proposed algorithms and peta-scale results will be reported. We have additional award from XSEDE Supercomputing facility (Award # TG-ASC200004) which includes Comet-Supercomputers which is a homogenous computing facility with similar multicore, and manycore processors available. These include Intel Xeon E5-2680v3 processors, with 1728 processor cores, 72 nodes, 8TB of memory, 286 GB of memory per node, and 884 TFlops of peak-performance. We currently have access to 100,000 hours of compute time which is also extendible via extensions, and renewals. In addition to this we similar access to supercomputing machines which have a GPU attached to them. The Comet cluster consists of 1944 compute nodes where each node is equipped with 2 sockets 12 cores of Intel Xeon E5-2680v3 processor, 2 NUMA nodes 64GB DDR4 DRAM, compiler: GCC 7.2 running CentOS. The compute nodes are interconnected through a 56 Gbps FDR InfiniBand network. The allowed maximum number of nodes per job is 72 and the allowed maximum runtime per job is 48 hours.

Design and Implementation

This site was forked from Dr. Allan Drummond’s lab website Dammond Lab, which in turn took a great deal of inspiration from Dr. Travis Bedford’s lab website Trevor Bedford. We have modified a lot of the code, imported some features from NBCLab but the core codebase is still largely taken from the Drummond lab’s original repository. The code was openly shared on GitHub under the MIT license, so please feel free to adapt it for your own purposes. However, if you do use any of the code, please remember to cite the code to Dr. Drummond and to link back to Dammond Lab. The site started with Jekyll Bootstrap, is deployed using GitHub Pages, which combines source-code control and hosting in a most pleasant way. Modify, test locally, push, and it’s live.

This site’s source code is freely available on GitHub at Saeed Lab Github. All code is placed under the MIT license. You’re welcome to borrow / repurpose code to build your own site, and if you do, I’d appreciate attribution and a link back here.